This post is a lab experiment learning from migration to the Percona Xtradb Cluster (Galera) and a very unexpected DEADLOCK scenario which took me back to basics.

(root@localhost) [test]>insert into app values (1); ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting transaction

Little background:

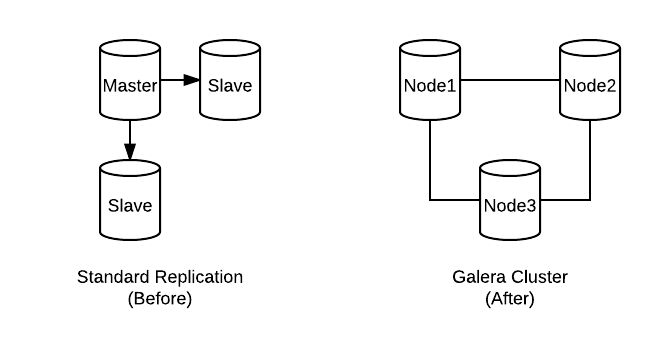

We needed to work on migrating the standard MySQL replication to a Galera (Percona Xtradb Cluster).

Previous configuration being one Master with two slaves detailed as follows:

server-1: MySQL community 5.6 serving as Master

server-2: MySQL community 5.6 serving as Slave

server-3: MySQL community 5.6 serving as Slave

We wanted to migrate above MySQL standard replication setup to Percona Xtradb Cluster (PXC 5.6) as following 3 nodes:

galera-1

galera-2

galera-3

Note that we’re not specifically reviewing the MySQL to Galera migration but only the exact deadlock issue. So let us ignore the application movement right now, assume we took downtime for app for this migration.

Let’s just understand quick steps for the migration from MySQL Community to Percona XtraDB Cluster 5.6:

– Prepare MySQL configuration for all nodes

– Migrated MySQL server-3 as galera-3 and “bootstrap-pxc”

– Set bootstrapped galera-3 node replicating from standard MySQL server-1 (Master)

– Migrated MySQL server-2 as galera-2 and joined the cluster.

– Migrated MySQL server-1 as galera-1 and joined the cluster.

– For all the above cluster migrations, we added following WSREP configuration variables to bring up PXC and left the rest of the my.cnf as it is:

wsrep_cluster_name wsrep_cluster_address wsrep_sst_method wsrep_on wsrep_provider wsrep_sst_auth wsrep_node_address wsrep_node_incoming_address

After migration to Percona Xtradb Galera Cluster it looked healthy:

– Server version from available repository:

Server version: 5.6.34-79.1-56-log Percona XtraDB Cluster (GPL), Release rel79.1, Revision 7c38350, WSREP version 26.19, wsrep_26.19 (root@localhost) [test]>show global status like 'wsrep%'; +------------------------------+-------------------------------------------------------------+ | Variable_name | Value | +------------------------------+-------------------------------------------------------------+ ... | wsrep_local_state_comment | Synced | ... | wsrep_incoming_addresses | 192.168.55.100:3306,192.168.55.101:3306,192.168.55.102:3306 | ... | wsrep_evs_state | OPERATIONAL | | wsrep_gcomm_uuid | 856defa9-f29f-11e6-bcc7-4b584f6c444a | | wsrep_cluster_conf_id | 9 | | wsrep_cluster_size | 3 | | wsrep_cluster_state_uuid | eb9fa4f3-f29d-11e6-8f1c-9eb67dae0ad5 | | wsrep_cluster_status | Primary | | wsrep_connected | ON | | wsrep_local_bf_aborts | 0 | | wsrep_local_index | 1 | | wsrep_provider_name | Galera | | wsrep_provider_vendor | Codership Oy <info@codership.com> | | wsrep_provider_version | 3.19(rb98f92f) | | wsrep_ready | ON | +------------------------------+-------------------------------------------------------------+

Now as all nodes joined the cluster, ran a CREATE TABLE which got replicated to all the nodes and relief! Cluster appears to works well. That was the time when we run an INSERT:

(root@localhost) [test]>insert into app values (1); ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting transaction

It was hard to doubt that someone or something is holding on or asking for locks!

A quick look at PROCESSLIST and SHOW ENGINE INNODB STATUS resulted only to find-out nothing, no-one really doing anything!

(root@localhost) [test]>show engine innodb status\G *************************** 1. row *************************** ... ------------ TRANSACTIONS ------------ Trx id counter 8010 Purge done for trx's n:o < 8010 undo n:o < 0 state: running but idle History list length 16 LIST OF TRANSACTIONS FOR EACH SESSION: ---TRANSACTION 8005, not started MySQL thread id 8, OS thread handle 0x7fc334358700, query id 83 localhost root init show engine innodb status ---TRANSACTION 7976, not started MySQL thread id 1, OS thread handle 0x7fc344b4b700, query id 42 committed 2 --------

Well cluster was up, SYNCED, PRIMARY but INSERT is colliding head-on to the DEADLOCK. Then one more thing was attempted:

(root@localhost) [test]>alter table app add column tcol tinyint; Query OK, 0 rows affected (0.02 sec)

What? ALTER TABLE worked!! no DEADLOCK? DDL are propagating well across the cluster but DMLs are not! There is no hints in error log about anything that could point towards this issue.

Finally raised this with a sane friend, he uttered a golden question: “Didn’t you know Galera can only support binlog_format as ROW?”

He pointed me something very very basic which was missed. In Galera, only ROW binlog_format is supported for normal operation.

Remember the my.cnf change specific to Percona XtraDB Cluster:

wsrep_cluster_name = kedar wsrep_cluster_address = gcomm://192.168.55.102,192.168.55.101,192.168.55.100 wsrep_sst_method = xtrabackup-v2 wsrep_on = ON wsrep_provider = /usr/lib/galera3/libgalera_smm.so wsrep_sst_auth = sstuser:sstpass wsrep_node_address = 192.168.55.101:4567 wsrep_node_incoming_address = 192.168.55.101:3306

Note the miss, binlog_format is not added or updated in my.cnf. The original node, MySQL Server version 5.6, had configuration option binlog_format set to MIXED. Default binlog_format is STATEMENT.

To fix the issue, the binlog_format configuration option was changed to ROW, all nodes restarted and the deadlock issue vanished.

Here are few questions I raised to learn more or to hide my “ROW” guilt.

Q.1 Is this some kind of bug that it reports DEADLOCK?

If not bug at-least this is a poor error message which really diverts away from the error and should be improved.

Q.2 Why error log is not vocal about this issue?

The MySQL Server or Percona XtraDB Cluster for this matter should know the Galera limitation and alert us beforehand and even deny booting-up for an unsupported binlog_format value.

Let’s analyze some bugs or documentation around it:

Check this bug report for “wsrep_check_opts doesn’t check for default values” where it says “wsrep_check_opts only checks if the opt/value has been specified in cnf or command line explicitly.” But in our case we have binlog_format=MIXED in my.cnf and yet the node cameup clean with nothing reported in error log!

The release note says following “Default value for binlog_format is now ROW. This is done so that Percona XtraDB Cluster is not started with wrong defaults leading to non-deterministic outcomes like crash. Bug fixed #1243228.” yet it doesn’t answer the wrong (mistakenly) value of binlog_format!

This appears still as a bug and then we’d add a feature request “Check binlog_format at bootup time and fail if it is not ROW”.

Q.3 Did Percona XtraDB Cluster ever blocked for wrong values of binlog_format? Is this newly introduced?

I believe no or couldn’t yet find anything so in documentation as of yet but would very much on-side of recommending having that check! If you clearly do not support something, why to bother even coming up with that!

So I looked further on release notes and came across these lines: “…when bin-logging is enabled, replication in the Galera eco-system will happen only if BINLOG_FORMAT is set to either ROW or STATEMENT. The recommended format is ROW, while STATEMENT is required only for the pt-table-checksum tool to operate correctly. If BINLOG_FORMAT is set to MIXED, replication of events in the Galera eco-system tables will not happen even with bin-logging enabled for those tables.”

In our case we had MIXED mode enabled, ALTERs were replicating as they’ll be logged as STATEMENT and work that way… but INSERTs? They’re logged in as STATEMENT and then denied? The MIXED binlog_format defaults to STATEMENT except for individual events that it detects are non-deterministic and therefore unsafe for statement-based replication are logged as ROW.

But why DEADLOCK for INSERT? We could have gotten a better message “Did you forget binlog_format should be ROW?” 😛

Anyways description under this bug report comment is good read.

Q.4 Any present bug reports for this?

This one bug report to refer where in the node drops from the cluster, “pxc node acting as async slave fails to replicate when binlog_format=MIXED” which is very much similar to #1494399. Anyways in our case, MIXED mode replication just produced DEADLOCK for INSERTs while the cluster was working well.

Did you know we have something to answer our feature request of Q.2?

In Percona XtraDB Cluster 5.7 we have new variable pxc-strict-mode. This variable is introduced to run the validations to avoid use of experimental and unsupported features in Percona XtraDB Cluster. Good thing is that by default, pxc_strict_mode is set to ENFORCING, except if the node is acting as a standalone server or the node is bootstrapping, then pxc_strict_mode defaults to DISABLED.

You may refer more about pxc-strict-mode.

Hope this helped but always remember Galera and ROW is a team ;).

2 comments

Thank you for sharing this knowledge. I look forward to reading more.

You’re welcome. Don’t hesitate to suggest a subject.